Strong Foundation: Why Data is the True Determinant of AI Preparedness

Companies are eager to realize the promise of Artificial Intelligence, with executives acknowledging that AI can drive revenue growth and that swift adoption is essential to keep pace with competitors. Yet, despite increased investment and activity, most organizations are struggling to achieve significant financial benefits from AI initiatives.

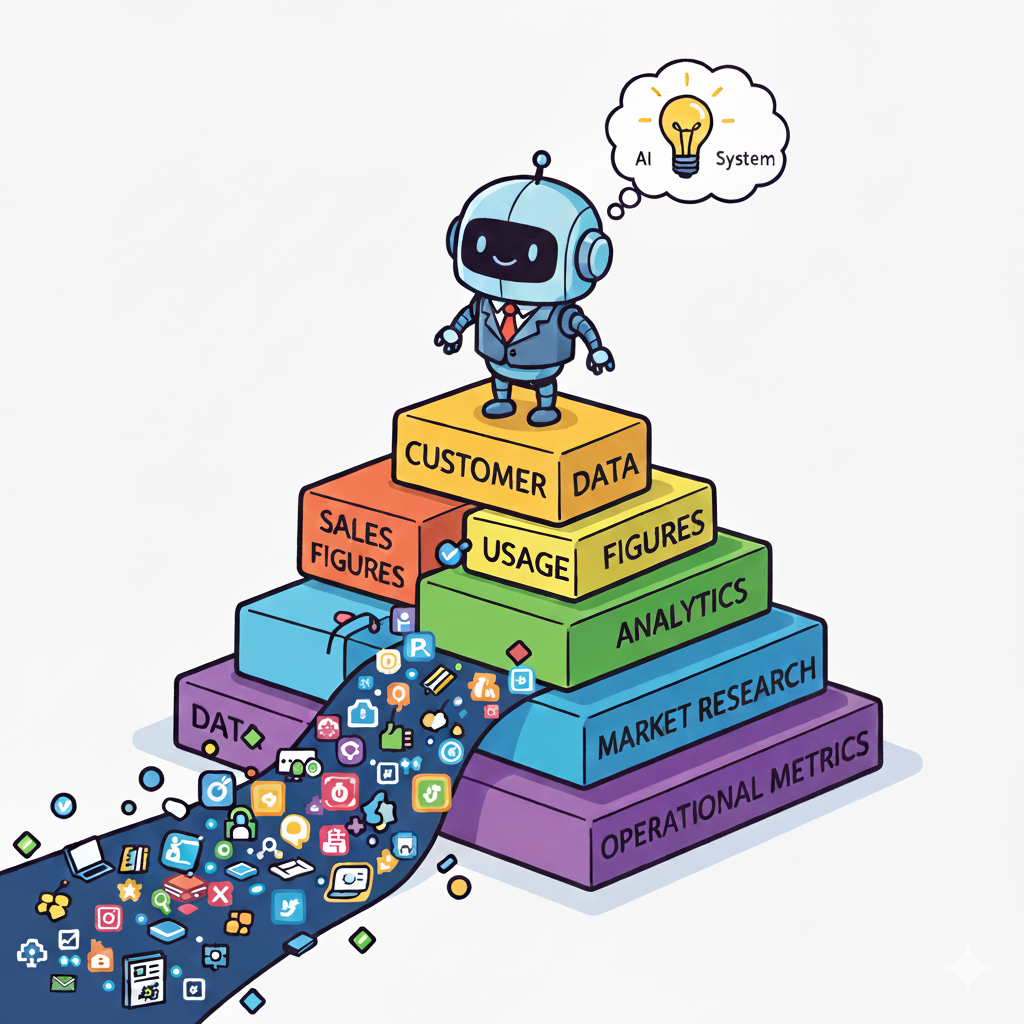

If AI is your ambition, data is the foundation that determines whether you reach it. For many businesses, the measurable benefits of AI remain frustratingly out of reach because the underlying data foundations are unstable. Readiness is not simply a matter of having the latest tools or hiring a large data science team; it hinges on building an entire data ecosystem defined by strong governance, quality, and accessibility. The leaders succeeding in the AI race are those who take on the essential, often unglamorous, task of organizing their data infrastructure first.

Why Data is the Lifeblood—and the Biggest Barrier

Data is universally considered the lifeblood of AI. This dependency means that AI models are only as good as the information they consume. Ignoring the material reality of your data foundation is one of the most common pitfalls in AI preparedness, leading to the dangerous assumption that access to data equates to its fitness for purpose.

This flawed assumption runs straight into the principle of "garbage in, garbage out". Industry surveys consistently show that low-quality data (information that is incomplete, inaccurate, or outdated) is a top barrier to successful AI implementation because it undermines analytic efforts and erodes trust in the resulting insights.

Furthermore, in organizations at the "Initial" or "Foundational" maturity level, core data sources are frequently siloed and difficult to access. This fragmentation limits the effectiveness of AI initiatives by preventing organizations from developing the comprehensive view that sophisticated use cases, such as personalized customer experiences, require. Layering automation onto this unstable foundation only creates problems faster, often compromising customer experience and diminishing employee trust.

The Non-Negotiable Pillars of a Solid Data Foundation

To move beyond isolated experiments to enterprise-wide operations, organizations must establish robust Data Foundation Readiness. This transformation is not about elegant diagrams but about achieving observable outcomes, such as meeting freshness and quality Service Level Agreements (SLAs) and reducing access cycle times.

The maturity model emphasizes establishing formal governance structures, standardized data architecture, and clear ownership. This governance is necessary to ensure the continuous availability, usability, and integrity of data while actively guarding against data bias.

Four elements are absolutely non-negotiable for achieving a production-grade data foundation:

1. Accountability Through Data Ownership and Stewardship

Every critical dataset, or "data product," must have a named business owner and a dedicated steward who possesses the decision authority and allocated time to maintain the asset. This ownership is crucial for enforcing quality SLAs and quickly resolving access requests. If a critical input for a top use case lacks an accountable data owner, it triggers a gating failure that prevents the Data readiness score from advancing above a basic level (Level 2.0). Without clear ownership, data access, quality, and lineage inevitably fail, stalling the AI program.
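The ownership gate described above can be expressed as a simple rule. The following is a minimal sketch, assuming a hypothetical `Dataset` record and a raw readiness score on the same scale as the 2.0 cap; the field and function names are illustrative and not taken from any specific maturity model.

```python
from dataclasses import dataclass
from typing import Optional

OWNERSHIP_CAP = 2.0  # the "basic level" cap mentioned in the text


@dataclass
class Dataset:
    name: str
    critical: bool                  # is it an input to a top use case?
    owner: Optional[str] = None     # named, accountable business owner
    steward: Optional[str] = None   # dedicated data steward


def gated_readiness_score(raw_score: float, datasets: list[Dataset]) -> float:
    """Cap the score at OWNERSHIP_CAP if any critical dataset has no owner."""
    ownership_gap = any(d.critical and not d.owner for d in datasets)
    return min(raw_score, OWNERSHIP_CAP) if ownership_gap else raw_score


datasets = [
    Dataset("customer_profile", critical=True, owner="Head of CRM", steward="J. Doe"),
    Dataset("orders", critical=True),  # no owner: triggers the gate
]
print(gated_readiness_score(3.4, datasets))  # 2.0
```

The gate never raises a score; it only prevents a high raw score from masking an ownership gap.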

2. Data Productization and Measurable Quality

The shift from treating data as ad-hoc tables and folders to defining it as high-value data products is central to readiness. These products must come with clear contracts, defining schemas, versioning, and enforceable SLAs for quality (e.g., completeness, uniqueness, referential integrity) and freshness.
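To make the quality dimensions named above concrete, here is a minimal sketch of how completeness, uniqueness, and referential integrity might be measured. It assumes rows are plain dictionaries and uses made-up `customers` and `orders` tables; a real data product would typically run equivalent checks in its warehouse or a data quality tool.

```python
def completeness(rows, column):
    """Share of rows where `column` is present and not None."""
    if not rows:
        return 0.0
    return sum(r.get(column) is not None for r in rows) / len(rows)


def uniqueness(rows, column):
    """Share of non-null values in `column` that are distinct."""
    values = [r[column] for r in rows if r.get(column) is not None]
    return len(set(values)) / len(values) if values else 0.0


def referential_integrity(child_rows, fk, parent_rows, pk):
    """Share of child rows whose foreign key exists in the parent table."""
    if not child_rows:
        return 1.0
    parent_keys = {r[pk] for r in parent_rows}
    return sum(r.get(fk) in parent_keys for r in child_rows) / len(child_rows)


# Illustrative data only.
customers = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},              # missing email
    {"id": 3, "email": "c@example.com"},
    {"id": 3, "email": "d@example.com"},   # duplicate id
]
orders = [
    {"order_id": 10, "customer_id": 1},
    {"order_id": 11, "customer_id": 9},    # no matching customer
]

report = {
    "email_completeness": completeness(customers, "email"),  # 0.75
    "id_uniqueness": uniqueness(customers, "id"),             # 0.75
    "order_fk_integrity": referential_integrity(
        orders, "customer_id", customers, "id"),              # 0.5
}
print(report)
```

Each metric is a ratio between 0 and 1, which makes it straightforward to compare against whatever threshold the data contract sets.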

Rather than attempting to fix all data quality problems at once, organizations should prioritize high-value domains, such as customer, product, and financial data. These efforts must be sustained by automated validations and continuous monitoring, ensuring attainment of freshness targets, often set at >95% over a 90-day window.
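As an illustration of the freshness target above, the sketch below computes SLA attainment over a trailing 90-day window. It assumes a hypothetical daily record of whether the dataset was refreshed on time; the function name, the record format, and the choice to count unrecorded days as misses are all assumptions.

```python
from datetime import date, timedelta

FRESHNESS_TARGET = 0.95  # the ">95%" target from the text


def freshness_attainment(daily_on_time, as_of, window_days=90):
    """Fraction of days in the trailing window that met the freshness target.

    `daily_on_time` maps a date to True/False. Days with no record are
    counted as missed (an assumption; some teams exclude them instead).
    """
    days = [as_of - timedelta(days=i) for i in range(window_days)]
    met = sum(1 for d in days if daily_on_time.get(d, False))
    return met / window_days


# Illustrative history: four late refreshes in the last 90 days.
as_of = date(2024, 6, 30)
late_offsets = {3, 17, 40, 71}
history = {as_of - timedelta(days=i): i not in late_offsets for i in range(90)}

attainment = freshness_attainment(history, as_of)
meets_sla = attainment > FRESHNESS_TARGET
print(f"{attainment:.1%} attainment, SLA met: {meets_sla}")
```

With four misses in 90 days the dataset reaches 86/90 attainment and passes; a fifth miss would bring it to 85/90, below the target.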

3. Metadata, Catalog, and Lineage

Practitioners must be able to discover and trust the data assets available. This requires a searchable catalog containing business and technical metadata, coupled with accurate, automated lineage tracking the data flow from source to consumption. The catalog must visibly document the classification (e.g., PII/PHI/PCI) and last-updated timestamps to maintain practitioner adoption.

4. Access Controls, Security, and Privacy

Security and privacy must be embedded, not bolted on. Access must adhere to the principle of least privilege, enforced through column- or row-level security, masking, tokenization, and auditable logs. Data security policies must also be updated to address new types of vulnerabilities introduced by AI, such as adversarial machine learning.
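A minimal sketch of column-level, least-privilege access with masking and an audit trail might look like the following. The roles, the `COLUMN_POLICY` mapping, and the salted-hash tokenization are illustrative assumptions; a production system would normally rely on the database's native column security and a vault-backed tokenization service rather than a truncated hash.

```python
import hashlib

# Hypothetical policy: which roles may see each column in clear text.
COLUMN_POLICY = {
    "customer_id": {"analyst", "support", "admin"},
    "email": {"support", "admin"},
    "card_number": {"admin"},
}


def tokenize(value, salt="demo-salt"):
    """Deterministic one-way token, so masked values can still be joined on."""
    return hashlib.sha256((salt + value).encode()).hexdigest()[:12]


def apply_policy(row, role, audit_log):
    """Return the row as `role` may see it, recording every decision."""
    visible = {}
    for column, value in row.items():
        # Default deny: columns missing from the policy are masked.
        allowed = role in COLUMN_POLICY.get(column, set())
        visible[column] = value if allowed else tokenize(str(value))
        audit_log.append((role, column, "clear" if allowed else "masked"))
    return visible


row = {"customer_id": "C-1", "email": "a@example.com",
       "card_number": "4111111111111111"}
audit_log = []
analyst_view = apply_policy(row, "analyst", audit_log)
print(analyst_view["customer_id"])  # C-1
print(audit_log)
```

The default-deny lookup is the important design choice: a newly added column stays masked for every role until someone explicitly grants access.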

The Data Challenge of Generative AI

The rise of Generative AI (GenAI) introduces new and distinct data readiness challenges, particularly concerning Retrieval-Augmented Generation (RAG). For RAG to function reliably, organizations must treat their internal knowledge base—the knowledge corpus—as a governed data asset.

The key data readiness requirements for GenAI include:

- Corpus Governance: Knowledge corpora must be curated, classified, and governed through their entire lifecycle (ingestion, chunking, embedding, re-indexing). Sensitive documents must be explicitly excluded or masked.

- Measurable Retrieval Quality: Before external exposure, organizations must define and enforce retrieval quality thresholds (e.g., precision/recall) and grounding/attribution standards. The absence of a measurable retrieval-quality gate is a critical gating failure that caps the readiness score.

- Prompt/Output Protection: The inputs (prompts) and outputs must be logged, protected, and redacted according to privacy policy. Furthermore, organizations must ensure that training opt-outs for third-party models are enforced, and that a tested path exists to delete/purge prompts, outputs, and embeddings upon a Data Subject Access Request (DSAR).
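The retrieval-quality gate in the list above can be sketched as a small evaluation function. This is a minimal illustration, assuming a hand-labeled evaluation set of (retrieved document IDs, relevant document IDs) pairs with no duplicate IDs in a result list; the thresholds and the value of `k` are placeholders, not recommended values.

```python
def precision_recall_at_k(retrieved, relevant, k):
    """Precision and recall of the top-k retrieved document IDs."""
    top_k = retrieved[:k]
    hits = sum(1 for doc_id in top_k if doc_id in relevant)
    precision = hits / len(top_k) if top_k else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


def passes_retrieval_gate(eval_set, k=3, min_precision=0.6, min_recall=0.8):
    """Average precision/recall over the eval set and compare to thresholds."""
    if not eval_set:
        raise ValueError("evaluation set is empty; the gate cannot be assessed")
    scores = [precision_recall_at_k(ret, rel, k) for ret, rel in eval_set]
    avg_p = sum(p for p, _ in scores) / len(scores)
    avg_r = sum(r for _, r in scores) / len(scores)
    return (avg_p >= min_precision and avg_r >= min_recall), avg_p, avg_r


# Illustrative evaluation set: (retrieved IDs in rank order, relevant IDs).
eval_set = [
    (["d1", "d2", "d3"], {"d1", "d3"}),
    (["d7", "d4", "d9"], {"d4", "d5"}),
]
ok, avg_p, avg_r = passes_retrieval_gate(eval_set)
print(ok, round(avg_p, 2), round(avg_r, 2))
```

Here the corpus fails the gate on both measures, which is the signal to improve chunking, indexing, or curation before exposing the system externally.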

The decision to scale AI safely, responsibly, and with measurable business impact rests squarely on your organization's willingness to invest in these data fundamentals. Are you building your AI aspirations on solid foundations or unstable ground? The time to act on the data foundation is now.

Three Practical Recommendations for Data Readiness

Based on the common challenges and foundational requirements of AI maturity models, here are three practical steps your organization can implement immediately to bolster your data readiness:

1. Enforce Gating on Data Ownership

Action: Mandate that 100% of the critical datasets (data products and GenAI corpora) required for your top three strategic AI use cases have a named, accountable business owner and steward.

Why it matters: The lack of an accountable owner for critical data is a gating failure that caps your AI scaling efforts. Ownership drives accountability for quality, ensures rapid decision-making on access requests, and prevents data decay. If a critical dataset lacks an owner, funding for the dependent AI initiative must be paused until the ownership gap is closed.

2. Prioritize and Measure Data Quality with SLAs

Action: Stop chasing perfect quality everywhere. Instead, define Data Quality and Freshness SLAs only for your highest-value data domains (e.g., customer, financial, product data). Instrument dashboards to continuously track SLA attainment (e.g., ≥95% freshness) over a 90-day window.

Why it matters: Measurable quality linked to business value prevents "garbage in, garbage out". By focusing on SLAs, you gain immediate visibility into data fitness for purpose, allowing technical teams to address reliability issues proactively, turning reactive cleanup into predictable data stewardship.

3. Operationalize GenAI Privacy and Retrieval Gates

Action: For any Generative AI or RAG use case, establish and test the "safe-to-operate" minimum for data protection. Implement mandatory pipeline checks (policy-as-code) to ensure prompts and outputs are logged, protected, and redacted. Simultaneously, deploy a retrieval evaluation harness to measure the grounding and retrieval quality of your knowledge corpus.

Why it matters: GenAI introduces high-risk legal and ethical exposure (e.g., data leakage, hallucination, privacy violations). Logging and protecting prompts and outputs is necessary for auditability and compliance, including testing the path for DSAR purges. Furthermore, if your RAG implementation lacks a measurable retrieval-quality gate, your organization is deploying systems whose answers it cannot show to be accurate or grounded, which invites reputational and operational failure.
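As a rough sketch of the pipeline checks described in this recommendation, the example below redacts prompts and outputs before they are logged, verifies that nothing unredacted reached the log, and exercises a purge path. The regular expressions, the in-memory `store`, and the function names are illustrative assumptions; real deployments would use a vetted PII detection service, and the purge would also need to cover embeddings and any downstream copies.

```python
import re

# Simplified patterns for illustration; real PII detection needs far more.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")


def redact(text):
    """Replace email addresses and card-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return CARD.sub("[CARD]", text)


def log_interaction(store, user_id, prompt, output):
    """Only redacted text is ever written to the log store."""
    record = {"user_id": user_id,
              "prompt": redact(prompt),
              "output": redact(output)}
    store.append(record)
    return record


def policy_violations(store):
    """Pipeline check: any logged record that still contains raw PII."""
    return [r for r in store
            if any(p.search(r[f]) for p in (EMAIL, CARD)
                   for f in ("prompt", "output"))]


def purge_user(store, user_id):
    """Delete every logged record for a user; returns the number removed."""
    before = len(store)
    store[:] = [r for r in store if r["user_id"] != user_id]
    return before - len(store)


store = []
log_interaction(store, "u1", "Email me at jane@example.com",
                "Charged card 4111 1111 1111 1111.")
print(store[0])
print(policy_violations(store))  # []
print(purge_user(store, "u1"))   # 1
```

Running `policy_violations` as a mandatory step in the deployment pipeline, and `purge_user` as a scheduled test, turns the policy into something that fails loudly rather than something that is assumed to hold.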

Don’t know where to start? Let’s talk. You don’t have to go on this AI journey alone.